I am currently a second-year master's student at Beihang University, advised by Assoc. Prof. Miao Wang and Prof. Shimin Hu. I received my B.Eng. degree from the Honors College of Beihang University, majoring in Computer Science. During that time, I was a research intern specializing in interaction and collaboration in virtual reality, under the guidance of Assoc. Prof. Miao Wang. My research interests lie primarily in computer graphics and 3D computer vision, with a focus on radiance fields. I also have a broad interest in virtual reality.


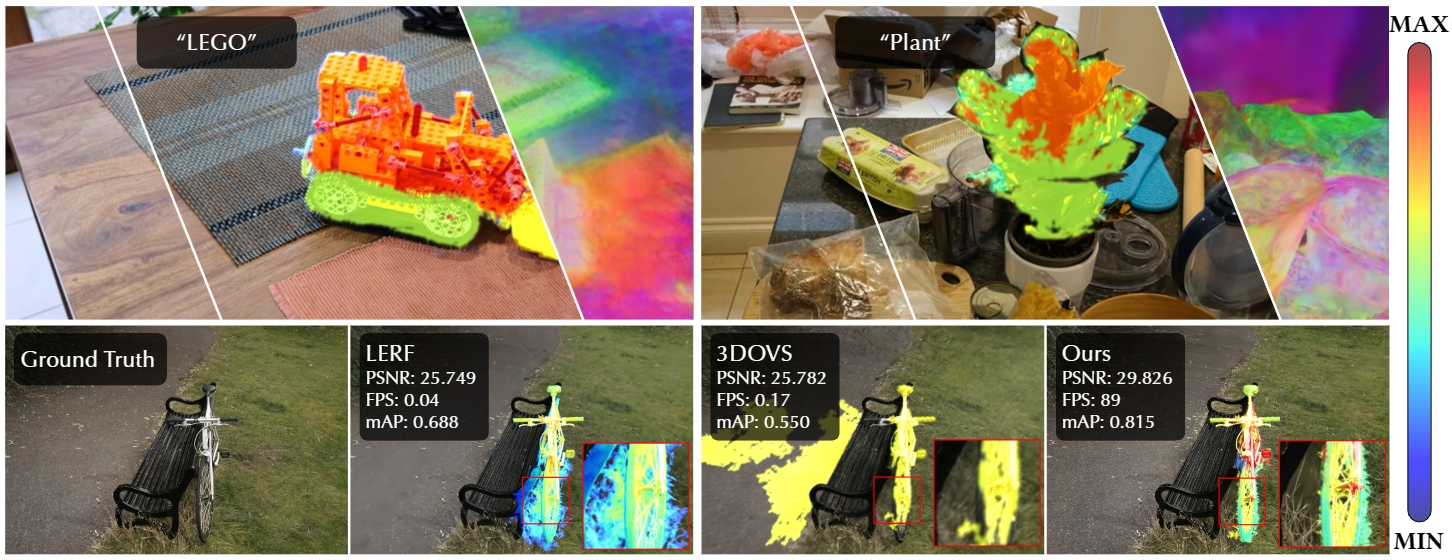

Jin-Chuan Shi, Miao Wang, Hao-Bin Duan, Shao-Hua Guan

CVPR, 2024

Paper / Project Page / Code

We present Language Embedded 3D Gaussians, a novel scene representation for efficient open-vocabulary query tasks in 3D space. It uses a memory-efficient quantization scheme and a novel embedding procedure to achieve high-quality query results, outperforming existing language-embedded representations in terms of visual quality and language querying accuracy, while maintaining real-time rendering on a single desktop GPU.


Hao-Bin Duan, Miao Wang, Jin-Chuan Shi, Xu-Chuan Chen, Yan-Pei Cao

ACM Transactions on Graphics (SIGGRAPH Asia), 2023

Paper / Project Page / Code

We present BakedAvatar, a real-time neural head avatar synthesis method for VR/AR and gaming. It uses multi-layered meshes and baked textures to significantly reduce computational cost while maintaining high-quality results and interactive frame rates on various devices, including mobile phones.

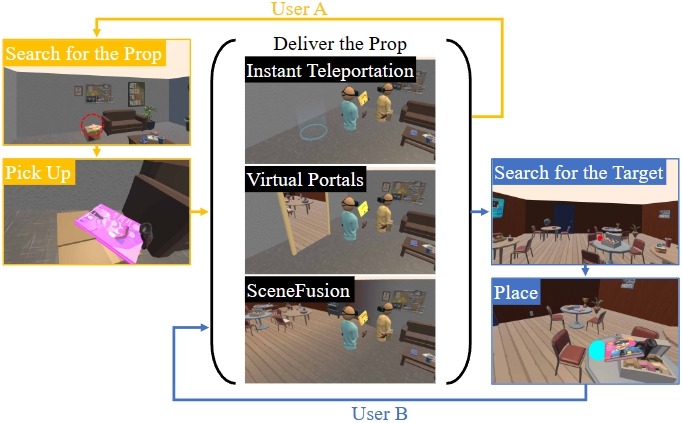

Miao Wang, Yi-Jun Li, Jin-Chuan Shi, Frank Steinicke

IEEE Transactions on Visualization and Computer Graphics, 2023

Paper

We present SceneFusion, an innovative navigation technique for efficient travel between virtual indoor scenes in VR environments, which fuses separate rooms to enhance visual continuity and spatial awareness, outperforming traditional teleportation and virtual portal methods in terms of efficiency, workload, and user preference in both single-user and multi-user scenarios.

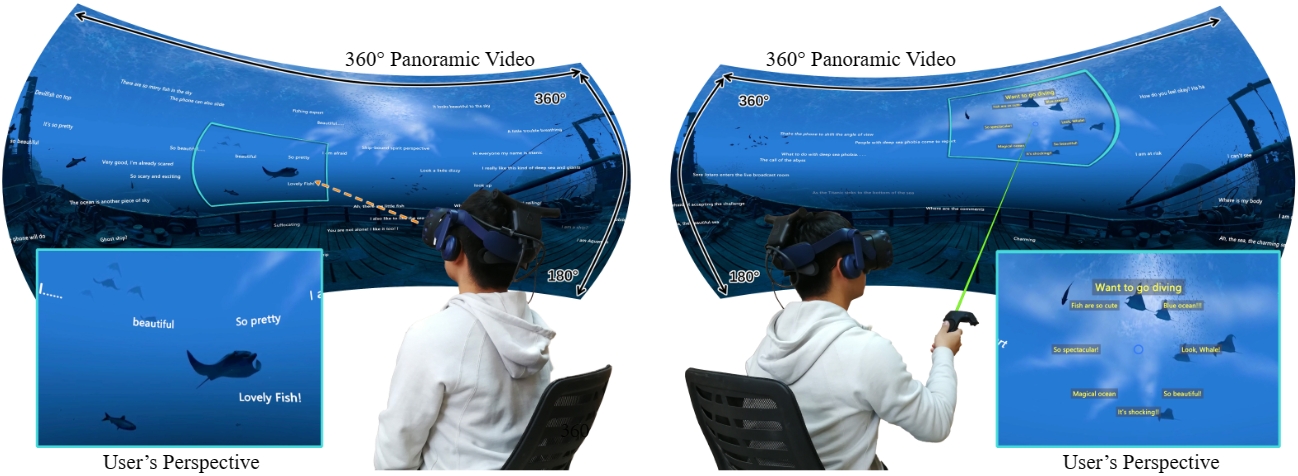

Yi-Jun Li, Jin-Chuan Shi, Fang-Lue Zhang, Miao Wang

IEEE VR, 2022

Paper

We explore bullet comment display and insertion in 360° video using head-mounted displays and controllers, evaluating various methods and discussing design insights.

Miao Wang, Ziming Ye, Jin-Chuan Shi, Yong-Liang Yang

IEEE VR, 2021

Paper

We present an intelligent VR interior design tool that enhances object selection and movement by considering scene context, leading to improved user experience, as validated through various interaction modes and user studies.

Last updated: Dec 2023